End-to-end Encrypted Shazam Using Fully Homomorphic Encryption

.png)

During the Zama Bounty Program Season 4, we asked the community to create a privacy-preserving version of Shazam using Fully Homomorphic Encryption (FHE) and Zama’s libraries. In this blog post, we are taking a look at the design of the winning solutions alongside their implementation:

🥇 1st place: A submission by Iamayushanand

🥈 2nd place A submission by GoktuEk

Introduction

There is no need to introduce Shazam. Indeed, despite being over 20 years old, it remains a top choice for music recognition. Today, song identification is not only used for recognizing a specific tune, it is also widely used in music streaming apps, as it enhances user experience by facilitating deeper exploration of music libraries and offering a more personalized listening experience.

At Zama, we are convinced that FHE is the future, paving the way for a new era of privacy-preserving applications. Considering Shazam's popularity, integrating FHE in its design shows how mainstream apps can benefit from it, without altering the user experience. And this is what we call privacy by design.

We also believe that it is the developers who will be in charge of safeguarding the privacy for millions of their users and this is why we are building in the open for them. The motivation behind the Zama Bounty Program is to give developers a platform to learn FHE and be rewarded for it. To date, we have distributed tens of thousands of euros in rewards. In our most recent bounty season, we recognized two innovative solutions that enhance Shazam with privacy features.

Shazam's algorithm, detailed in a 2003 paper, involves users sending queries to its servers for matching in its central database—a prime scenario for applying FHE to protect user data. We challenged bounty participants to create a Shazam-esque app using FHE, allowing for any machine learning technique or the method outlined in Shazam's original paper.

Both of the winning solutions structured their work into two steps:

- Song signature extraction on cleartext songs, performed on the client side

- Look-up of the encrypted signatures in the music database on the server side

The winning solution (1st place) is based on a custom implementation using machine learning models, and matches a song against a 1000-song database in less than half a second, while providing a client/server application based on the Concrete ML classifiers.

The second solution (2nd place) reproduces the Shazam paper implementation while leveraging the power of Zama’s Concrete compiler.

Designing a privacy-preserving Shazam

First step: extract the audio features

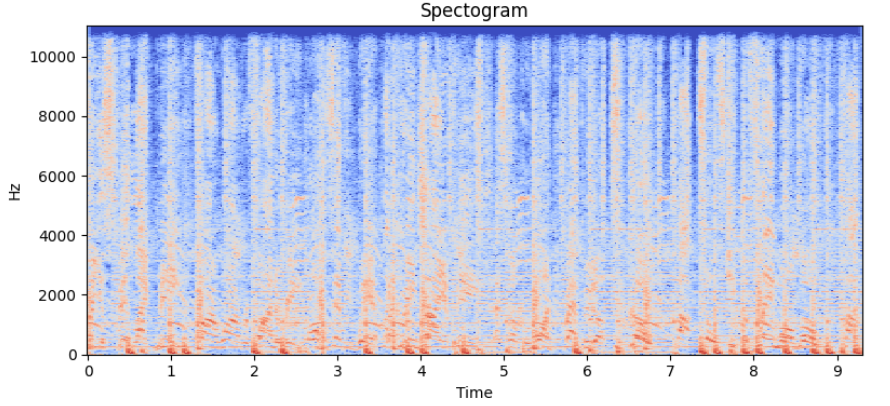

Spectrograms are a popular approach to extracting features from raw audio. Spectrograms are created by applying the Fourier transform to overlapping segments of an audio track. They are resilient to noise and audio compression artifacts, common in recordings made by phones in noisy environments. Furthermore, by normalizing the amplitude spectrum it is possible to add invariance to audio volume.

Second step: convert the original Shazam algorithm to FHE

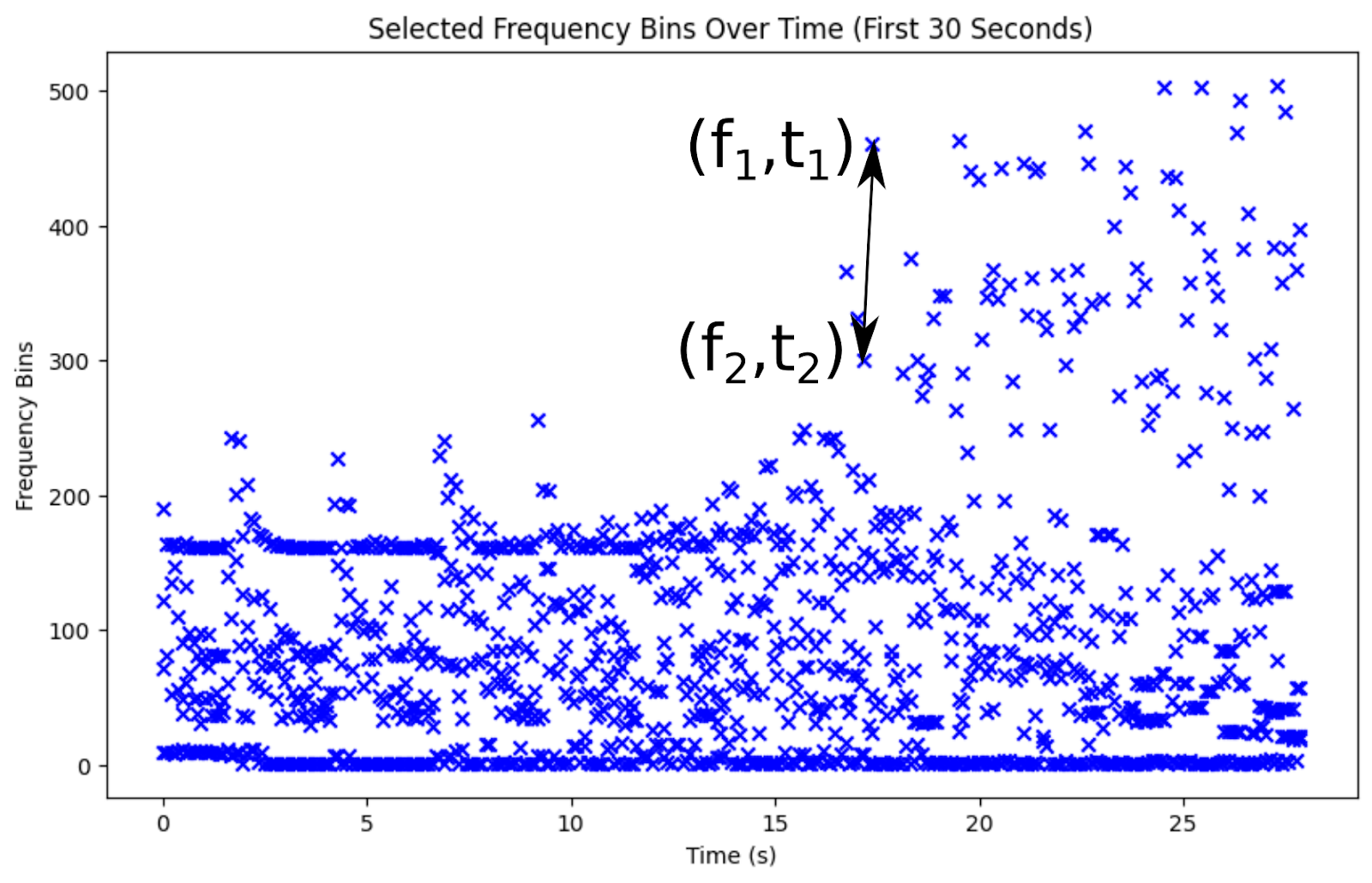

The original Shazam algorithm utilizes spectrograms, identifying local maxima within these and recording their time and frequency of occurrence.

Peaks in the spectrogram are key, yet identifying individual songs from billions demands even greater specificity. The Shazam paper highlights the use of peak pairs, close in time, to distinguish songs from brief recordings. A collection of such peak pairs, marking high-amplitude peaks with their frequencies and the time gap between them, uniquely identifies a song. Encoding frequencies on 10 bits and the time delta on 12 bits results in a 32-bit number which can be considered as a hash of the song.

The second prize-winning solution generates hashes from cleartext spectrograms, later encrypting these hashes for server transmission. To adapt, the precision of time deltas is scaled down to 8 bits, yielding more compact 28-bit hashes.

Identifying a song in the database now relies on counting the matching 28-bit hashes between the query song and each song of the database. Following Shazam's method, a sorted index is employed to compile a list of likely matching songs, with a final decision made based on the timing of these matches.

However, in an FHE-based implementation, a sorted index is not feasible since branching execution cannot occur with encrypted values. The second prize solution circumvented this by comparing the query hashes against all database hashes directly. For every song in the database, it tallied the matches, ultimately returning a list detailing the match count for each song.

Matching hashes involves an equality comparison. And that is where the TFHE scheme used by Zama stands out, as it allows exact comparisons thanks to the Programmable Bootstrapping (PBS). The cost of a PBS varies depending on the input precision. To optimize its solution, the second-prize winner divides each hash into 4-bit values. Consequently, comparing two 28-bit hashes transforms into a quicker series of equality checks across 7 4-bit chunks, followed by a summation and an additional test to verify all chunks match. This process demands 8 4-bit PBS operations per hash, with each song comprising 25 hashes.

The matching process for each song in the database takes approximately 3 seconds on a modern 8-core machine, with this model achieving an accuracy of 97%.

Spotlight on the winner: the classification-based approach

The winner of the challenge looked at the problem in a different way. They also based their work on spectrograms but stopped short of extracting ad-hoc features from them. They cut up the song in half-second windows, generated spectrograms for each, and extracted Mel-frequency cepstral coefficients (MFCC) from these. The coefficients extracted from 15-second segments of a song are compiled in a single feature vector

For each song in the database, a few dozen feature vectors are extracted and assigned the song's ID as their label. Following this, a multi-class logistic regression model is trained using Concrete ML, designed to classify each feature vector and match it to its corresponding song ID.

The linear models in Concrete ML operate without the need for programmable bootstrapping, making them significantly faster than methods that require bootstrapping. This efficiency enables the model to compare a query song against the entire database in less than half a second, all while maintaining a 95% accuracy rate.

Conclusion

We are very thrilled by the innovative approaches these two winning solutions used to tackle this challenge. Both participants demonstrated a creative use of the Concrete libraries, at both the machine learning level and in the cryptographic implementations. Once again, the developers have shown us that FHE can be a cornerstone for privacy protection, and that it has the power to enhance privacy in the popular apps used by millions.

Additional links

- The official Bounty Program and Grant Program Github repository (Don't forget to star it ⭐️).

- Our community channels, for support and more.

- Zama on Twitter.

.svg)

.png)